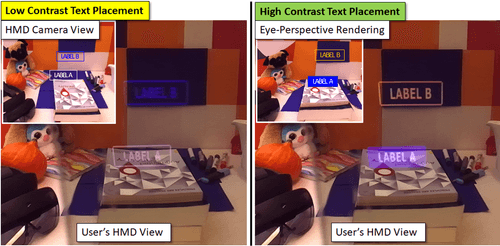

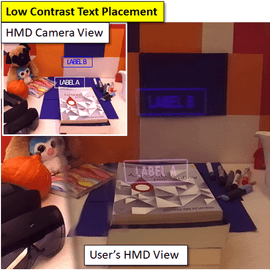

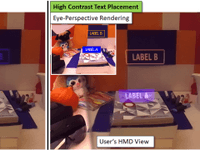

With the rise of advanced mobile optical see-through head-mounted displays (OST HMDs, e.g., Microsoft HoloLens 2), Augmented Reality (AR) is becoming increasingly relevant for practical use cases in which the real world is augmented with supporting information. However, the semi-transparent displays of state-of-the-art OST HMDs cannot block the environmental light of the user's surroundings. The display color therefore mixes with the background color of the real world, which lowers the contrast of virtual augmentations and makes AR visualizations hard to comprehend. Furthermore, the inherently mobile nature of OST HMDs leads to frequent changes in the background color, causing unpredictable visualization outcomes. These issues are limiting in general, but they become dangerous when workers cannot perceive instructions properly: if safety instructions are not sufficiently salient, machines or equipment may not be handled according to specifications. This project is a first step towards solving these fundamental issues. We investigate novel adaptive visualizations for AR that automatically adapt virtual representations in real time to improve their contrast against the current real-world background and, thus, ensure their comprehensibility.
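The color mixing described above can be illustrated with a small sketch. It assumes a deliberately simplified model that is not taken from the project itself: light from the display adds to the background light per channel in linear RGB, luminance uses the Rec. 709 weights, and contrast is measured with the WCAG contrast-ratio formula. All function names are hypothetical.

```python
# Simplified, assumed model of an optical see-through display:
# the display can only add light to what the eye already sees.
# Colors are linear RGB triples with components in [0, 1].

def luminance(rgb):
    """Relative luminance of a linear RGB color (Rec. 709 weights)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def blend_additive(display, background):
    """Color reaching the eye: display light added to background light."""
    return tuple(min(1.0, d + b) for d, b in zip(display, background))

def contrast_ratio(lum_a, lum_b):
    """WCAG-style contrast ratio between two luminances (always >= 1)."""
    hi, lo = max(lum_a, lum_b), min(lum_a, lum_b)
    return (hi + 0.05) / (lo + 0.05)

def perceived_contrast(display, background):
    """Contrast between an augmented pixel and the bare background."""
    seen = blend_additive(display, background)
    return contrast_ratio(luminance(seen), luminance(background))

def pick_most_salient(candidates, background):
    """Hypothetical adaptation step: choose the candidate display color
    with the highest perceived contrast against the current background."""
    return max(candidates, key=lambda c: perceived_contrast(c, background))

if __name__ == "__main__":
    green = (0.0, 1.0, 0.0)
    dark_wall = (0.05, 0.05, 0.05)
    bright_wall = (0.8, 0.8, 0.8)
    print("green on dark background:  ", perceived_contrast(green, dark_wall))
    print("green on bright background:", perceived_contrast(green, bright_wall))
```

In this model the same green overlay yields a much lower contrast ratio on the bright background than on the dark one, and a black display pixel contributes no light at all, so it is indistinguishable from the background. A real OST HMD would also require display calibration and a perceptual color model, which this sketch omits.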

Adaptive Visualization for Augmented Reality

Project Description

Additional Information

Funded by the Austrian Research Promotion Agency (FFG), grant number 877104

Project Partners

Images

Publications

Towards Eye-Perspective Rendering for Optical See-Through Head-Mounted Displays (2022)

In proceedings

Eye-Perspective View Management for Optical See-Through Head-Mounted Displays (2023)

In proceedings